Informative Note

AI Act: Commission guidelines on high-risk systems

20/06/2025

The European Commission recently launched a stakeholder consultation on the implementation of the AI Act’s rules on the classification of artificial intelligence (AI) systems as high risk. The purpose of the consultation is to collect contributions on:

Classification of AI systems as high-risk

- Practical examples of AI systems that can be classified as high risk

- Questions on the rules for classification of AI systems as high risk pursuant to Article 6 and Annexes I and III of the Artificial Intelligence Act [1] (AI Act)

Proposal for Commission guidelines on Article 6 of the AI Act (Guidelines)

- Issues to be clarified in the Commission Guidelines on the classification of AI systems as high risk

- Future guidelines on the requirements and obligations that high-risk AI systems must meet

- Clear definition of the responsibilities of providers, deployers and others along the AI value chain

CLASSIFICATION OF SYSTEMS IN THE AI ACT:

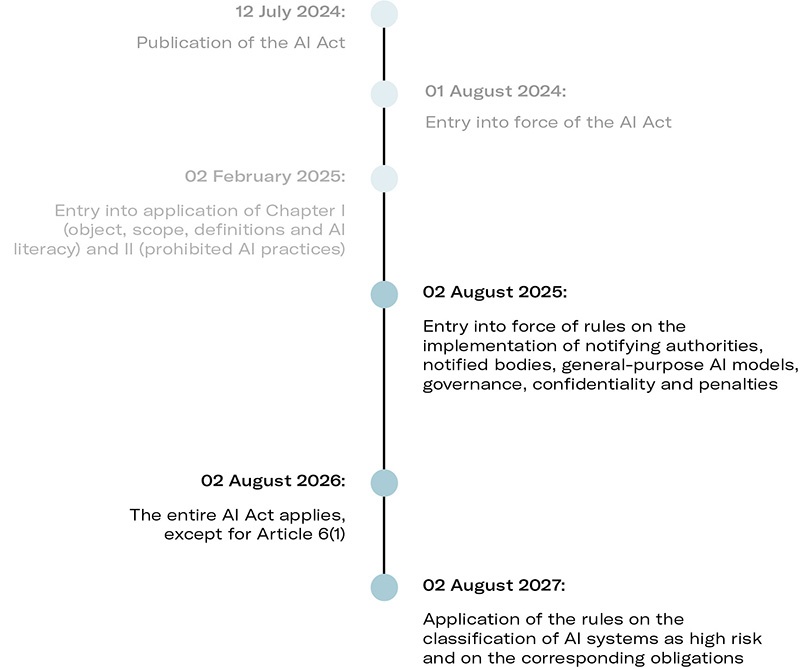

The AI Act came into force on 1 August 2024 to establish harmonised rules for the use of coherent, trustworthy and human-centric artificial intelligence systems.

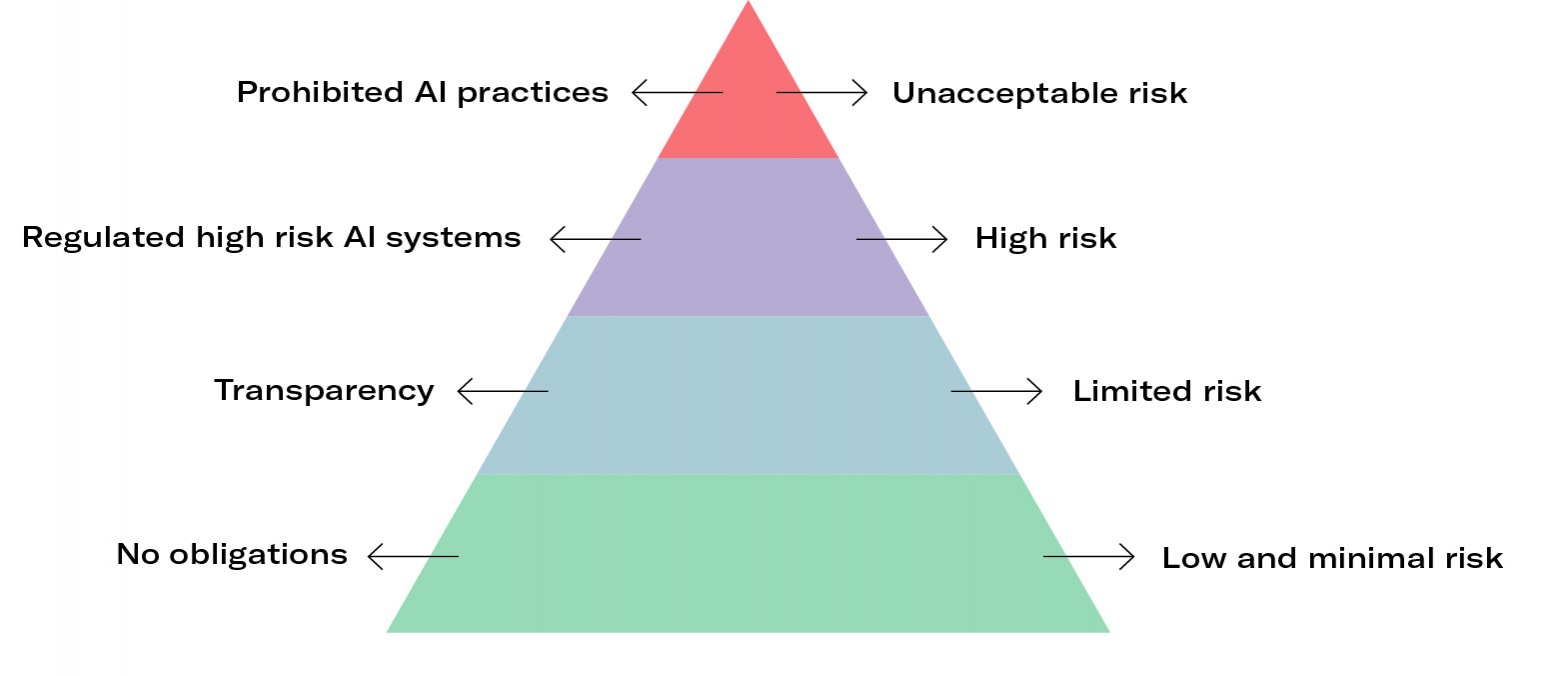

The classification of AI systems follows a risk-based approach [2], especially with regard to the high-risk systems provided for in Chapter III of the AI Act.

The accompanying figure illustrates the various levels of risk classification, including AI systems whose use is not permitted because they are considered prohibited AI practices, pursuant to Article 5 of the AI Act [3].

Data source: European Commission

The AI Act states that high-risk systems can only be used if they do not pose an unacceptable risk to people’s health, safety or fundamental rights. These systems are divided into:

- AI systems used as safety components of products, or that are themselves products, covered by the EU harmonisation legislation listed in Annex I [4]

- AI systems that are covered by Annex III of the AI Act and which pose significant risks to health, safety or fundamental rights [5]. These systems are not considered to be of high risk where they do not pose a significant risk [6]
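The two routes described above can be read as a simple decision rule. The following is a minimal Python sketch of that reading, for illustration only. The `AISystem` class, its field names and the `is_high_risk` function are hypothetical and do not come from the AI Act or from the Commission. The sketch encodes only the summary given in this note and leaves out further conditions that the Act itself sets.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AISystem:
    """Hypothetical, simplified description of an AI system (illustrative only)."""
    name: str
    # Route 1: safety component of, or itself, a product covered by Annex I.
    annex_i_safety_component_or_product: bool = False
    # Route 2: system covered by Annex III.
    listed_in_annex_iii: bool = False
    # Assumption: the outcome of the "significant risk" assessment is already known.
    poses_significant_risk: bool = True


def is_high_risk(system: AISystem) -> bool:
    """Apply the two classification routes as summarised in this note."""
    if system.annex_i_safety_component_or_product:
        return True
    if system.listed_in_annex_iii:
        # An Annex III system is not high risk where it poses no significant risk.
        return system.poses_significant_risk
    return False
```

On this simplified reading, an Annex III system assessed as posing no significant risk is not classified as high risk, whereas a system falling under the Annex I route is.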

OBJECTIVE OF THE GUIDELINES PROPOSED BY THE COMMISSION:

According to the AI Act, the European Commission should publish guidelines on the practical application of the Act by February 2026, in particular those relating to the classification of AI systems as high risk [7].

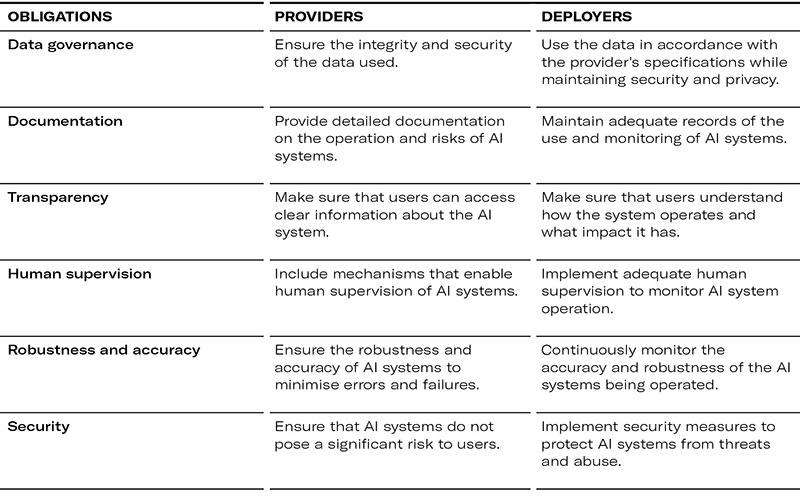

In addition, the Commission should draw up guidelines on the legal requirements and responsibilities of operators throughout the value chain [8]. The Guidelines aim to:

- Provide practical examples and answer questions about the classification of AI systems as high risk, as referred to in Articles 6(1) and (2) and Annexes I and III of the AI Act

- Support providers and deployers in understanding and complying with the applicable requirements and obligations relating to high-risk AI systems, such as:

The consultation promoted by the European Commission closes on 18 July 2025, with the Guidelines to be published by 2 February 2026. The rules on the classification of systems as high risk, as set out in Article 6(1), will come into effect on 2 August 2027.

APPLICATION OF AI ACT – WHERE ARE WE?

[2] See Recital 26 of the AI Act.

[3] According to Recital 28 of the AI Act, the use of AI systems for manipulative, exploitative, or social control practices is prohibited under EU law, as these practices do not align with EU values. However, there are exceptions for their use in law enforcement situations, such as biometric identification systems. The use of such systems is subject to exhaustive and restrictive regulation.

[4] Article 6(1) of the AI Act.

[5] Article 6(2) of the AI Act.

[6] Article 6(3) of the AI Act. This article applies to systems that perform narrow procedural tasks; improve the outcome of previous human activities; detect decision-making patterns without significantly replacing or influencing human assessment; and perform preparatory tasks in the context of relevant assessments. Examples of AI systems that are exempt from being considered high risk include: (i) interactive platforms and virtual tutors, provided they do not manipulate behaviour or create harmful dependency; (ii) support robots for the elderly and people with disabilities that assist with daily activities without replacing human assessment; (iii) tools that help individuals integrate into new communities or the labour market without significantly influencing critical decisions.

[7] Article 6(5) of the AI Act.

[8] Article 96(1)(a) of the AI Act.